Image Storage Solutions for SaaS: Local vs S3 vs CDN - Complete Implementation Guide

Choosing the right image storage solution is critical for SaaS applications. This guide provides detailed implementation strategies for local storage, AWS S3, and CDN solutions, with specific code examples for React and Next.js applications.

Image Storage Strategy Overview

Why Storage Strategy Matters

Performance Impact:

- Load Times: Affects user experience and SEO

- Bandwidth Costs: Impacts operational expenses

- Scalability: Determines growth potential

- Reliability: Affects application availability

Business Considerations:

- Development Speed: Time to market

- Operational Costs: Storage and bandwidth pricing

- Compliance: Data residency requirements

- Backup and Recovery: Data protection needs

Technical Factors:

- File Size Management: Handling large images

- Concurrent Access: Multiple users uploading/downloading

- Global Distribution: Serving users worldwide

- Image Processing: Resizing, compression, format conversion

Local Storage Implementation

When to Use Local Storage

Ideal Scenarios:

- MVP Development: Quick prototyping and testing

- Small Applications: Limited user base and storage needs

- Development Environment: Local testing and debugging

- Compliance Requirements: Data must stay on premises

Limitations:

- Scalability: Limited server disk space

- Availability: A single server is a single point of failure, and its disk cannot be shared by multiple app instances

- Backup: Manual backup processes required

- Geographic Distribution: No edge locations

React Local Storage Implementation

Directory Structure:

```text
src/
├── components/
│   ├── ImageUpload.jsx
│   ├── ImageDisplay.jsx
│   └── ImageGallery.jsx
├── services/
│   ├── imageService.js
│   └── storageService.js
└── utils/
    ├── imageUtils.js
    └── fileUtils.js
public/
└── uploads/
    ├── profiles/
    ├── products/
    └── thumbnails/
```
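The category folders above are chosen by the client at upload time, so it is worth mapping the requested category onto this layout defensively before touching the filesystem. A minimal sketch, assuming a fixed allowlist of folder names (the UPLOAD_CATEGORIES set and the resolveUploadPath function are hypothetical, not part of any library):

```javascript
const path = require('path');

// Hypothetical allowlist mirroring the public/uploads subfolders, plus the
// 'general' default used by the upload handlers.
const UPLOAD_CATEGORIES = new Set(['profiles', 'products', 'thumbnails', 'general']);

function resolveUploadPath(uploadRoot, category, filename) {
  // Reject categories outside the allowlist instead of trusting user input
  if (!UPLOAD_CATEGORIES.has(category)) {
    throw new Error(`Unknown upload category: ${category}`);
  }
  // path.basename drops any directory components smuggled into the filename
  const safeName = path.basename(filename);
  const root = path.resolve(uploadRoot);
  const target = path.resolve(root, category, safeName);
  // Defence in depth: the resolved path must stay inside the upload root
  if (!target.startsWith(root + path.sep)) {
    throw new Error('Resolved path escapes the upload root');
  }
  return target;
}
```

With this in place, a filename such as "../../etc/passwd" is reduced to "passwd" inside the chosen category folder, and an unknown category is refused outright.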

File Upload Component:

```jsx
import React, { useState } from 'react';
import { uploadImage } from '../services/imageService';

const VALID_TYPES = ['image/jpeg', 'image/png', 'image/webp'];
const MAX_SIZE = 5 * 1024 * 1024; // 5MB, matches the server limit

function ImageUpload({ onUploadSuccess, category = 'general' }) {
  const [uploading, setUploading] = useState(false);
  const [selectedFile, setSelectedFile] = useState(null);
  const [preview, setPreview] = useState(null);

  // Validate the chosen file and show a preview; the upload itself is
  // started separately by the "Upload" button.
  const handleFileSelect = (event) => {
    const file = event.target.files[0];
    if (!file) return;

    if (!VALID_TYPES.includes(file.type)) {
      alert('Please select a valid image file (JPEG, PNG, or WebP)');
      return;
    }
    if (file.size > MAX_SIZE) {
      alert('File size must be less than 5MB');
      return;
    }

    setSelectedFile(file);
    const reader = new FileReader();
    reader.onload = (e) => setPreview(e.target.result);
    reader.readAsDataURL(file);
  };

  const handleUpload = async () => {
    if (!selectedFile) return;
    setUploading(true);
    try {
      const formData = new FormData();
      // Append the text field before the file: multer's storage callbacks run
      // while the stream is parsed and only see fields sent before the file.
      formData.append('category', category);
      formData.append('image', selectedFile);

      // uploadImage() resolves to the parsed JSON body (the image record)
      const image = await uploadImage(formData);
      onUploadSuccess(image);
      setSelectedFile(null);
      setPreview(null);
    } catch (error) {
      console.error('Upload failed:', error);
      alert('Upload failed. Please try again.');
    } finally {
      setUploading(false);
    }
  };

  return (
    <div className="image-upload">
      <input
        type="file"
        accept="image/jpeg,image/png,image/webp"
        onChange={handleFileSelect}
        disabled={uploading}
        style={{ display: 'none' }}
        id="image-upload"
      />
      <label htmlFor="image-upload" className="upload-button">
        Select Image
      </label>

      {preview && (
        <div className="preview">
          <img src={preview} alt="Preview" style={{ maxWidth: '200px' }} />
          <button type="button" onClick={handleUpload} disabled={uploading}>
            {uploading ? 'Uploading...' : 'Upload'}
          </button>
        </div>
      )}
    </div>
  );
}

export default ImageUpload;
```
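Field order in the multipart body matters for the Express backend below: multer's storage callbacks run while the request stream is parsed, so req.body only holds fields that were sent before the file. A small helper that fixes the order (buildUploadFormData is a hypothetical name, not part of the original code):

```javascript
// Builds the multipart body for the upload endpoint. The text field is
// appended before the file so that multer's destination/filename callbacks,
// which run as the stream is parsed, can already read req.body.category.
function buildUploadFormData(file, category = 'general') {
  const formData = new FormData();
  formData.append('category', category);
  formData.append('image', file);
  return formData;
}

// Example inside ImageUpload's upload handler:
// const image = await uploadImage(buildUploadFormData(selectedFile, category));
```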

Image Service:

```javascript
// services/imageService.js
const API_BASE_URL = process.env.REACT_APP_API_URL || 'http://localhost:3001';

export const uploadImage = async (formData) => {
  const response = await fetch(`${API_BASE_URL}/api/images/upload`, {
    method: 'POST',
    body: formData,
    // No Content-Type header: the browser sets multipart/form-data with
    // the correct boundary for FormData bodies.
    headers: {
      Authorization: `Bearer ${localStorage.getItem('token')}`,
    },
  });

  if (!response.ok) {
    throw new Error('Upload failed');
  }
  return response.json(); // the image record returned by the server
};

// Builds a URL for a stored image, e.g. getImage('products/123-456.jpg')
export const getImage = (imagePath) => {
  return `${API_BASE_URL}/uploads/${imagePath}`;
};

export const deleteImage = async (imageId) => {
  const response = await fetch(`${API_BASE_URL}/api/images/${imageId}`, {
    method: 'DELETE',
    headers: {
      Authorization: `Bearer ${localStorage.getItem('token')}`,
    },
  });

  if (!response.ok) {
    throw new Error('Delete failed');
  }
  return response.json();
};
```

Express.js Backend:

```javascript
// server.js
const express = require('express');
const cors = require('cors');
const multer = require('multer');
const path = require('path');
const fs = require('fs');
const sharp = require('sharp');

const app = express();
const UPLOAD_ROOT = path.join(__dirname, 'public/uploads');

// Needed when the React dev server (port 3000) calls this API on port 3001
app.use(cors({ origin: 'http://localhost:3000' }));

// Accept only simple folder names such as "profiles" or "products"; the value
// comes from the client and is used to build a filesystem path.
const safeCategory = (value) => (/^[a-z0-9_-]+$/i.test(value || '') ? value : 'general');

// Multer configuration
const storage = multer.diskStorage({
  destination: (req, file, cb) => {
    // req.body only contains fields sent *before* the file in the multipart
    // body, so the client must append "category" before "image".
    const uploadPath = path.join(UPLOAD_ROOT, safeCategory(req.body.category));
    fs.mkdirSync(uploadPath, { recursive: true }); // no-op if it already exists
    cb(null, uploadPath);
  },
  filename: (req, file, cb) => {
    const uniqueSuffix = `${Date.now()}-${Math.round(Math.random() * 1e9)}`;
    cb(null, `${uniqueSuffix}${path.extname(file.originalname).toLowerCase()}`);
  },
});

const upload = multer({
  storage,
  limits: { fileSize: 5 * 1024 * 1024 }, // 5MB limit
  fileFilter: (req, file, cb) => {
    const allowedTypes = ['image/jpeg', 'image/png', 'image/webp'];
    if (allowedTypes.includes(file.mimetype)) {
      cb(null, true);
    } else {
      cb(new Error('Invalid file type'), false);
    }
  },
});

// Upload endpoint
app.post('/api/images/upload', upload.single('image'), async (req, res) => {
  try {
    if (!req.file) {
      return res.status(400).json({ error: 'No file uploaded' });
    }

    const originalPath = req.file.path;
    // Use the folder multer actually wrote to, so returned URLs match the disk
    const category = path.basename(path.dirname(originalPath));
    const { dir, name } = path.parse(originalPath);

    // Generate a 200x200 cropped thumbnail
    const thumbnailPath = path.join(dir, `${name}_thumb.jpg`);
    await sharp(originalPath)
      .resize(200, 200, { fit: 'cover' })
      .jpeg({ quality: 80 })
      .toFile(thumbnailPath);

    // Generate an optimized version that fits within 800x600
    const optimizedPath = path.join(dir, `${name}_optimized.jpg`);
    await sharp(originalPath)
      .resize(800, 600, { fit: 'inside', withoutEnlargement: true })
      .jpeg({ quality: 85 })
      .toFile(optimizedPath);

    res.json({
      id: name, // the unique filename stem doubles as an ID
      originalName: req.file.originalname,
      filename: req.file.filename,
      category,
      paths: {
        original: `/uploads/${category}/${req.file.filename}`,
        optimized: `/uploads/${category}/${path.basename(optimizedPath)}`,
        thumbnail: `/uploads/${category}/${path.basename(thumbnailPath)}`,
      },
      size: req.file.size,
      mimetype: req.file.mimetype,
      uploadedAt: new Date().toISOString(),
    });
  } catch (error) {
    console.error('Upload error:', error);
    res.status(500).json({ error: 'Upload failed' });
  }
});

// Serve static files
app.use('/uploads', express.static(UPLOAD_ROOT));

app.listen(3001, () => {
  console.log('Server running on port 3001');
});
```
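Multer reports limit violations such as oversized files as MulterError objects carrying a code, and the fileFilter above rejects with a plain Error('Invalid file type'); by default Express turns both into a generic 500 response. One way to return JSON with meaningful status codes, sketched as a pure function (uploadErrorToHttp and its messages are assumptions) plus the four-argument error middleware that would call it:

```javascript
// Maps upload errors to an HTTP status and JSON body.
function uploadErrorToHttp(err) {
  if (err && err.code === 'LIMIT_FILE_SIZE') {
    return { status: 413, body: { error: 'File exceeds the 5MB limit' } };
  }
  if (err && err.message === 'Invalid file type') {
    return { status: 415, body: { error: 'Only JPEG, PNG and WebP images are accepted' } };
  }
  if (err && err.name === 'MulterError') {
    // Other multer limits, e.g. LIMIT_UNEXPECTED_FILE for a wrong field name
    return { status: 400, body: { error: err.message } };
  }
  return { status: 500, body: { error: 'Upload failed' } };
}

// Registered after the routes:
// app.use((err, req, res, next) => {
//   const { status, body } = uploadErrorToHttp(err);
//   res.status(status).json(body);
// });
```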

Next.js Local Storage Implementation

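Next.js only serves files that were in the public directory at build time, so images the route below writes there at runtime will not be served by next start in production. Treat this as a development setup, or serve the uploads directory from a separate static server or object storage.

API Route for Upload (Pages Router):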

```javascript
// pages/api/upload.js
import formidable from 'formidable';
import fs from 'fs';
import path from 'path';
import crypto from 'crypto';
import sharp from 'sharp';

export const config = {
  api: {
    bodyParser: false, // formidable parses the multipart body itself
  },
};

const UPLOAD_ROOT = path.join(process.cwd(), 'public/uploads');
const EXTENSIONS = { 'image/jpeg': '.jpg', 'image/png': '.png', 'image/webp': '.webp' };

export default async function handler(req, res) {
  if (req.method !== 'POST') {
    return res.status(405).json({ error: 'Method not allowed' });
  }

  // formidable fails if uploadDir does not exist yet
  fs.mkdirSync(UPLOAD_ROOT, { recursive: true });

  const form = formidable({
    uploadDir: UPLOAD_ROOT,
    maxFileSize: 5 * 1024 * 1024, // 5MB
    // Skip parts that are not accepted image types
    filter: ({ mimetype }) => Boolean(mimetype && EXTENSIONS[mimetype]),
  });

  try {
    const [fields, files] = await form.parse(req); // formidable v3 promise API
    const file = files.image?.[0];
    if (!file) {
      return res.status(400).json({ error: 'No valid image uploaded' });
    }

    // Accept only simple folder names; the value comes from the client
    const requested = fields.category?.[0];
    const category = /^[a-z0-9_-]+$/i.test(requested || '') ? requested : 'general';

    // Name the file from a random ID and the validated MIME type,
    // not from the client-supplied filename
    const id = crypto.randomUUID();
    const filename = `${id}${EXTENSIONS[file.mimetype]}`;

    const categoryDir = path.join(UPLOAD_ROOT, category);
    fs.mkdirSync(categoryDir, { recursive: true });
    const finalPath = path.join(categoryDir, filename);
    fs.renameSync(file.filepath, finalPath);

    // Variants keep the original extension; without an explicit format call,
    // sharp infers the output format from the file extension.
    const thumbnailPath = path.join(categoryDir, `thumb_${filename}`);
    const optimizedPath = path.join(categoryDir, `opt_${filename}`);
    await sharp(finalPath).resize(200, 200, { fit: 'cover' }).toFile(thumbnailPath);
    await sharp(finalPath)
      .resize(800, 600, { fit: 'inside', withoutEnlargement: true })
      .toFile(optimizedPath);

    res.json({
      id,
      filename,
      category,
      paths: {
        original: `/uploads/${category}/${filename}`,
        optimized: `/uploads/${category}/opt_${filename}`,
        thumbnail: `/uploads/${category}/thumb_${filename}`,
      },
      size: file.size,
      uploadedAt: new Date().toISOString(),
    });
  } catch (error) {
    console.error('Upload error:', error);
    res.status(500).json({ error: 'Upload failed' });
  }
}
```

Image Component with Local Storage:

```jsx
// components/OptimizedImage.jsx
import Image from 'next/image';
import { useState } from 'react';

// Pass width and height (or fill) through props; next/image requires them.
function OptimizedImage({ src, alt, category, ...props }) {
  const [imageError, setImageError] = useState(false);

  // Maps a stored filename to one of the variants written by the upload route
  const getImagePath = (variant = 'optimized') => {
    const basePath = `/uploads/${category}`;
    const filename = src.split('/').pop();
    switch (variant) {
      case 'thumbnail':
        return `${basePath}/thumb_${filename}`;
      case 'optimized':
        return `${basePath}/opt_${filename}`;
      default:
        return `${basePath}/${filename}`;
    }
  };

  if (imageError) {
    return (
      <div className="image-placeholder">
        <span>Image not available</span>
      </div>
    );
  }

  return (
    <Image
      src={getImagePath('optimized')}
      alt={alt}
      onError={() => setImageError(true)}
      {...props}
    />
  );
}

export default OptimizedImage;
```

AWS S3 Implementation

When to Use S3

Ideal Scenarios:

- Scalable Applications: High storage requirements

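- Global User Base: Accessible worldwide, though objects are served from a single region unless you add a CDN

- High Availability: S3 Standard is designed for 99.99% availability (the 99.999999999% figure refers to durability)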

- Cost Optimization: Pay-as-you-use pricing

Benefits:

- Virtually Unlimited Scalability: No limit on total bucket size (individual objects can be up to 5 TB)

- High Durability: Data replicated across multiple facilities

- Global Accessibility: Access from anywhere

- Cost Effective: Low storage costs

S3 Setup and Configuration

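Bucket Policy (grants public read access to objects; S3 Block Public Access must be disabled for the bucket, or the policy is rejected):

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "PublicRead",
      "Effect": "Allow",
      "Principal": "*",
      "Action": "s3:GetObject",
      "Resource": "arn:aws:s3:::your-saas-images/*"
    }
  ]
}
```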

CORS Configuration:

```json
[
  {
    "AllowedHeaders": ["*"],
    "AllowedMethods": ["GET", "PUT", "POST", "DELETE"],
    "AllowedOrigins": ["https://yourdomain.com"],
    "ExposeHeaders": ["ETag"],
    "MaxAgeSeconds": 3600
  }
]
```

Environment Variables:

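```bash
# .env.local (server-side only: never expose these through REACT_APP_ or
# NEXT_PUBLIC_ variables, which are embedded in the client-side bundle)
AWS_ACCESS_KEY_ID=your_access_key
AWS_SECRET_ACCESS_KEY=your_secret_key
AWS_REGION=us-east-1
S3_BUCKET_NAME=your-saas-images
```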

React S3 Implementation

S3 Service Class:

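```javascript
// services/s3Service.js
// Browser-side helper. AWS credentials must never ship in a React bundle
// (REACT_APP_* values are embedded in the built JavaScript), so this client
// asks your backend for short-lived presigned URLs instead. The /api/s3/...
// endpoints are hypothetical; implement them server-side, for example with
// the presigned-URL helper in the security section.
const API_BASE_URL = process.env.REACT_APP_API_URL || 'http://localhost:3001';

class S3Service {
  constructor() {
    // Bucket name and region are not secret; they are only used to build URLs
    this.bucketName = process.env.REACT_APP_S3_BUCKET_NAME;
    this.region = process.env.REACT_APP_AWS_REGION;
  }

  // Authenticated JSON request to your own backend
  async request(path, options = {}) {
    const response = await fetch(`${API_BASE_URL}${path}`, {
      ...options,
      headers: {
        'Content-Type': 'application/json',
        Authorization: `Bearer ${localStorage.getItem('token')}`,
        ...options.headers,
      },
    });
    if (!response.ok) {
      throw new Error(`Request to ${path} failed: ${response.status}`);
    }
    return response.json();
  }

  // Uploads a File/Blob under `key`. Object metadata is not sent from the
  // browser in this sketch; record it server-side (e.g. in your database).
  async uploadFile(file, key) {
    const { url } = await this.request('/api/s3/presign-upload', {
      method: 'POST',
      body: JSON.stringify({ key, contentType: file.type }),
    });

    // The Content-Type must match the one the URL was signed with
    const upload = await fetch(url, {
      method: 'PUT',
      headers: { 'Content-Type': file.type },
      body: file,
    });
    if (!upload.ok) {
      throw new Error(`S3 upload failed: ${upload.status}`);
    }
    // Same shape as the SDK's upload() result used by the components
    return { Key: key, Location: this.getFileUrl(key) };
  }

  async deleteFile(key) {
    await this.request(`/api/s3/objects/${encodeURIComponent(key)}`, { method: 'DELETE' });
    return true;
  }

  getFileUrl(key) {
    return `https://${this.bucketName}.s3.${this.region}.amazonaws.com/${key}`;
  }

  // Presigned GET URL for private objects, issued by the backend
  async generatePresignedUrl(key, expires = 3600) {
    const { url } = await this.request('/api/s3/presign-download', {
      method: 'POST',
      body: JSON.stringify({ key, expires }),
    });
    return url;
  }
}

export default new S3Service();
```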
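The getFileUrl method above concatenates the key as-is, which works for the UUID-based keys used in this guide. If keys ever contain spaces or non-ASCII characters (for example user-supplied names), each path segment needs percent-encoding while the "/" separators are kept. A sketch for virtual-hosted-style URLs (s3ObjectUrl is a hypothetical helper):

```javascript
// Builds https://<bucket>.s3.<region>.amazonaws.com/<key>, percent-encoding
// each key segment but preserving the "/" separators between them.
function s3ObjectUrl(bucket, region, key) {
  const encodedKey = key.split('/').map(encodeURIComponent).join('/');
  return `https://${bucket}.s3.${region}.amazonaws.com/${encodedKey}`;
}
```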

S3 Upload Component:

```jsx
// components/S3ImageUpload.jsx
import React, { useState } from 'react';
import { v4 as uuidv4 } from 'uuid';
import s3Service from '../services/s3Service';

const VALID_TYPES = ['image/jpeg', 'image/png', 'image/webp'];
const MAX_SIZE = 10 * 1024 * 1024; // 10MB limit

// Scales the image to fit (letterboxed) inside width x height and resolves
// to a JPEG Blob. Generating thumbnails server-side is usually more robust.
function generateThumbnail(file, width, height) {
  return new Promise((resolve, reject) => {
    const objectUrl = URL.createObjectURL(file);
    const img = new Image();

    img.onload = () => {
      const canvas = document.createElement('canvas');
      canvas.width = width;
      canvas.height = height;

      // Fit the image inside the target box, preserving aspect ratio
      const aspectRatio = img.width / img.height;
      let drawWidth = width;
      let drawHeight = height;
      if (aspectRatio > width / height) {
        drawHeight = width / aspectRatio;
      } else {
        drawWidth = height * aspectRatio;
      }
      const offsetX = (width - drawWidth) / 2;
      const offsetY = (height - drawHeight) / 2;
      canvas.getContext('2d').drawImage(img, offsetX, offsetY, drawWidth, drawHeight);

      URL.revokeObjectURL(objectUrl);
      canvas.toBlob(
        (blob) => (blob ? resolve(blob) : reject(new Error('Thumbnail encoding failed'))),
        'image/jpeg',
        0.8,
      );
    };
    img.onerror = () => {
      URL.revokeObjectURL(objectUrl);
      reject(new Error('Could not decode image'));
    };
    img.src = objectUrl;
  });
}

function S3ImageUpload({ onUploadSuccess, category = 'general' }) {
  const [uploading, setUploading] = useState(false);

  const handleFileUpload = async (event) => {
    const file = event.target.files[0];
    event.target.value = ''; // allow selecting the same file again
    if (!file) return;

    if (!VALID_TYPES.includes(file.type)) {
      alert('Please select a valid image file');
      return;
    }
    if (file.size > MAX_SIZE) {
      alert('File size must be less than 10MB');
      return;
    }

    setUploading(true);
    try {
      // One ID for the image and its thumbnail; extension from the MIME type
      const id = uuidv4();
      const extension = file.type.split('/')[1].replace('jpeg', 'jpg');
      const key = `${category}/${id}.${extension}`;

      const result = await s3Service.uploadFile(file, key, {
        Metadata: { originalName: file.name, category, uploadedAt: new Date().toISOString() },
      });

      const thumbnailKey = `${category}/thumbnails/${id}.jpg`;
      const thumbnailBlob = await generateThumbnail(file, 200, 200);
      await s3Service.uploadFile(thumbnailBlob, thumbnailKey);

      onUploadSuccess({
        id,
        key,
        thumbnailKey,
        url: result.Location,
        thumbnailUrl: s3Service.getFileUrl(thumbnailKey),
        originalName: file.name,
        size: file.size,
        type: file.type,
        category,
        uploadedAt: new Date().toISOString(),
      });
    } catch (error) {
      console.error('Upload failed:', error);
      alert('Upload failed. Please try again.');
    } finally {
      setUploading(false);
    }
  };

  // No percentage is shown: fetch() does not report upload progress
  // (XMLHttpRequest's upload.onprogress does, if you need a progress bar).
  return (
    <div className="s3-upload">
      <input
        type="file"
        accept="image/jpeg,image/png,image/webp"
        onChange={handleFileUpload}
        disabled={uploading}
        style={{ display: 'none' }}
        id="s3-upload"
      />
      <label htmlFor="s3-upload" className="upload-button">
        {uploading ? 'Uploading...' : 'Upload to S3'}
      </label>
    </div>
  );
}

export default S3ImageUpload;
```
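generateThumbnail above scales the whole image into the 200x200 box and letterboxes it, whereas the server-side Sharp examples use fit: 'cover', which crops instead. To produce cover-style thumbnails in the browser, compute the largest centred source rectangle with the target aspect ratio and pass it to the nine-argument form of drawImage. A sketch (coverCrop is a hypothetical helper):

```javascript
// Returns the source rectangle for a "cover" crop. Use it as:
// ctx.drawImage(img, sx, sy, sw, sh, 0, 0, targetWidth, targetHeight)
function coverCrop(srcWidth, srcHeight, targetWidth, targetHeight) {
  // Scale factor at which the source just covers the target box
  const scale = Math.max(targetWidth / srcWidth, targetHeight / srcHeight);
  const sw = targetWidth / scale;
  const sh = targetHeight / scale;
  return {
    sx: (srcWidth - sw) / 2, // centre the crop horizontally
    sy: (srcHeight - sh) / 2, // and vertically
    sw,
    sh,
  };
}
```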

Next.js S3 Implementation

Server-Side S3 Integration:

```javascript
// pages/api/s3-upload.js
// Uses the AWS SDK for JavaScript v2; new code should prefer v3 (@aws-sdk/client-s3).
import AWS from 'aws-sdk';
import formidable from 'formidable';
import fs from 'fs';
import sharp from 'sharp';
import { v4 as uuidv4 } from 'uuid';

const s3 = new AWS.S3({
  accessKeyId: process.env.AWS_ACCESS_KEY_ID,
  secretAccessKey: process.env.AWS_SECRET_ACCESS_KEY,
  region: process.env.AWS_REGION,
});

export const config = {
  api: {
    bodyParser: false,
  },
};

const EXTENSIONS = { 'image/jpeg': 'jpg', 'image/png': 'png', 'image/webp': 'webp' };
const publicUrl = (key) =>
  `https://${process.env.S3_BUCKET_NAME}.s3.${process.env.AWS_REGION}.amazonaws.com/${key}`;

export default async function handler(req, res) {
  if (req.method !== 'POST') {
    return res.status(405).json({ error: 'Method not allowed' });
  }

  const form = formidable({
    maxFileSize: 10 * 1024 * 1024, // 10MB
    filter: ({ mimetype }) => Boolean(mimetype && EXTENSIONS[mimetype]),
  });

  let file;
  try {
    const [fields, files] = await form.parse(req);
    file = files.image?.[0];
    if (!file) {
      return res.status(400).json({ error: 'No valid image uploaded' });
    }

    const fileBuffer = await fs.promises.readFile(file.filepath);
    const requested = fields.category?.[0];
    const category = /^[a-z0-9_-]+$/i.test(requested || '') ? requested : 'general';
    const id = uuidv4();
    const key = `${category}/${id}.${EXTENSIONS[file.mimetype]}`;

    // No ACL parameter: buckets created since April 2023 have ACLs disabled by
    // default, and public read access comes from the bucket policy instead.
    const result = await s3.upload({
      Bucket: process.env.S3_BUCKET_NAME,
      Key: key,
      Body: fileBuffer,
      ContentType: file.mimetype,
      Metadata: {
        originalName: file.originalFilename || '',
        category,
        uploadedAt: new Date().toISOString(),
      },
    }).promise();

    // Generate the thumbnail server-side with Sharp
    const thumbnailBuffer = await sharp(fileBuffer)
      .resize(200, 200, { fit: 'cover' })
      .jpeg({ quality: 80 })
      .toBuffer();
    const thumbnailKey = `${category}/thumbnails/${id}.jpg`;
    await s3.upload({
      Bucket: process.env.S3_BUCKET_NAME,
      Key: thumbnailKey,
      Body: thumbnailBuffer,
      ContentType: 'image/jpeg',
    }).promise();

    res.json({
      id,
      key,
      thumbnailKey,
      url: result.Location,
      thumbnailUrl: publicUrl(thumbnailKey),
      originalName: file.originalFilename,
      size: file.size,
      type: file.mimetype,
      category,
      uploadedAt: new Date().toISOString(),
    });
  } catch (error) {
    console.error('S3 upload error:', error);
    res.status(500).json({ error: 'Upload failed' });
  } finally {
    // Remove formidable's temporary file whether or not the upload succeeded
    if (file) await fs.promises.unlink(file.filepath).catch(() => {});
  }
}
```
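S3 user-defined metadata travels as x-amz-meta-* HTTP headers, is limited to 2 KB, and comes back with lowercase keys. A conservative approach, assumed here rather than required by S3, is to percent-encode values such as original filenames so the headers stay ASCII-only, and to check the size before uploading (toS3Metadata is a hypothetical helper):

```javascript
// Percent-encodes metadata values and enforces the 2 KB limit on
// user-defined metadata, measured as UTF-8 bytes of keys plus values.
function toS3Metadata(fields) {
  const metadata = {};
  let bytes = 0;
  for (const [key, value] of Object.entries(fields)) {
    const encoded = encodeURIComponent(String(value));
    metadata[key] = encoded;
    bytes += Buffer.byteLength(key) + Buffer.byteLength(encoded);
  }
  if (bytes > 2048) {
    throw new Error(`Metadata is ${bytes} bytes; S3 allows 2 KB`);
  }
  return metadata;
}
```

When reading the object back, decode with decodeURIComponent(Metadata.originalname), using the lowercase key that S3 returns.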

S3 Image Component:

```jsx
// components/S3Image.jsx
import Image from 'next/image';
import { useState } from 'react';

// The S3 hostname must be allowed in next.config.js (images.remotePatterns);
// next/image throws an error for unconfigured remote hosts.
function S3Image({
  src,
  alt,
  width,
  height,
  quality = 'optimized', // not forwarded: next/image's quality prop expects a number
  fallback = '/placeholder.jpg',
  ...props
}) {
  const [imageError, setImageError] = useState(false);
  const [loading, setLoading] = useState(true);

  const getImageUrl = () => {
    if (imageError) return fallback;
    // If src is already a full URL, return it
    if (src.startsWith('http')) return src;
    // Otherwise treat src as an object key and build the S3 URL
    const bucketName = process.env.NEXT_PUBLIC_S3_BUCKET_NAME;
    const region = process.env.NEXT_PUBLIC_AWS_REGION;
    return `https://${bucketName}.s3.${region}.amazonaws.com/${src}`;
  };

  // Hide the image with opacity rather than display: none. An element with
  // display: none has no layout box, which can keep a lazily loaded image
  // from ever starting to load (so onLoad would never fire).
  return (
    <div className="s3-image-container">
      {loading && (
        <div className="image-skeleton">
          <div className="skeleton-placeholder" />
        </div>
      )}
      <Image
        src={getImageUrl()}
        alt={alt}
        width={width}
        height={height}
        onError={() => setImageError(true)}
        onLoad={() => setLoading(false)}
        style={{ opacity: loading ? 0 : 1, transition: 'opacity 0.3s ease' }}
        {...props}
      />
    </div>
  );
}

export default S3Image;
```

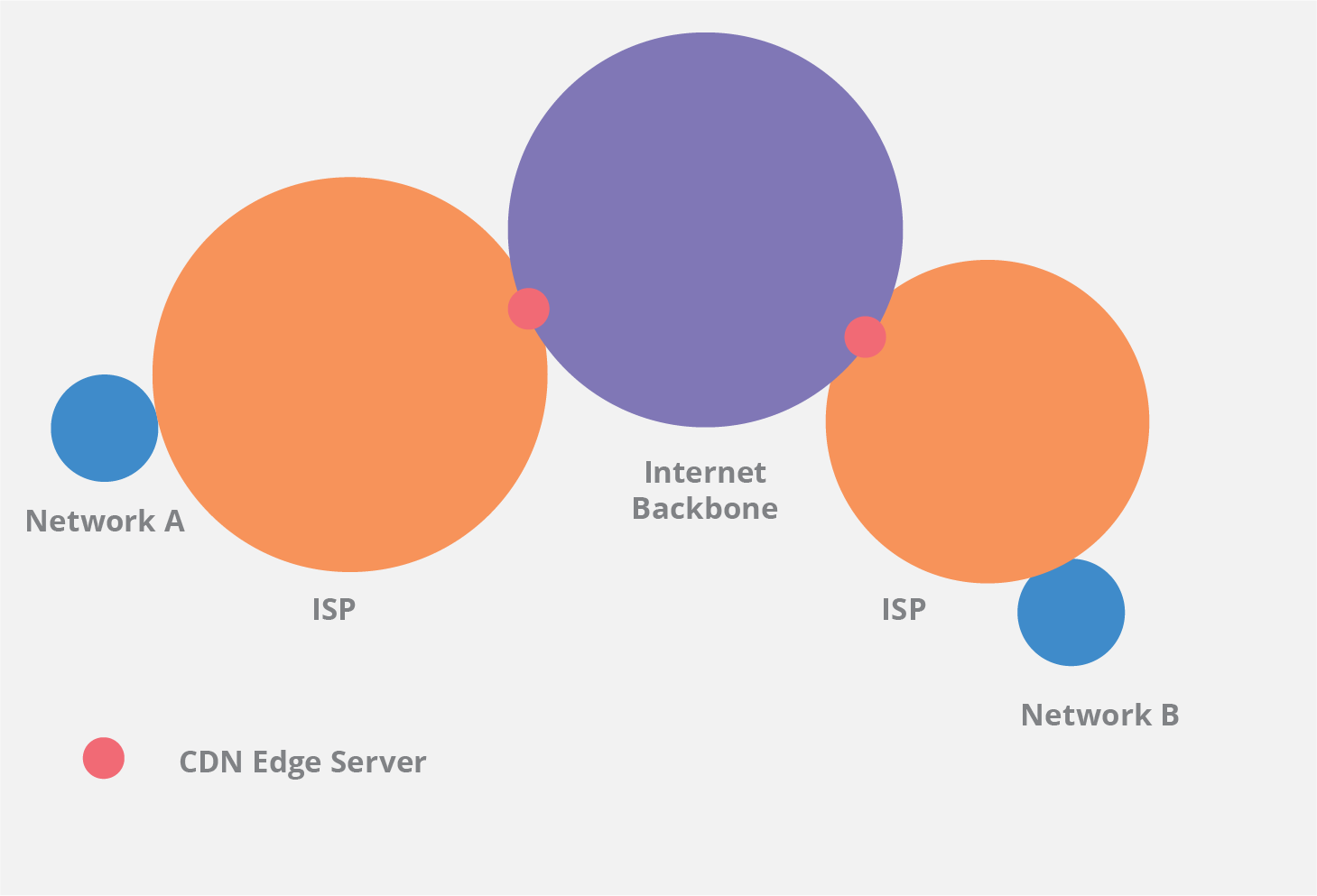

CDN Implementation

When to Use CDN

Ideal Scenarios:

- Global User Base: Users across different continents

- High Traffic: Many concurrent users

- Performance Critical: Sub-second load times required

- Mobile Users: Optimized mobile delivery

Benefits:

- Faster Load Times: Edge locations closer to users

- Reduced Origin Load: Edge caches answer repeat requests, so fewer requests and bytes reach S3

- Better User Experience: Faster perceived performance

- SEO Benefits: Improved Core Web Vitals

CloudFront with S3 Implementation

CloudFront Configuration:

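```javascript
// aws-config.js
// Illustrative summary of the distribution settings; this is not the exact
// payload shape expected by the CloudFront API.
const cloudfrontConfig = {
  distributionId: process.env.CLOUDFRONT_DISTRIBUTION_ID,
  domainName: process.env.CLOUDFRONT_DOMAIN_NAME,
  origins: [
    {
      id: 'S3-origin', // referenced by targetOriginId below
      domainName: `${process.env.S3_BUCKET_NAME}.s3.${process.env.AWS_REGION}.amazonaws.com`,
      // Empty: the upload code stores keys such as "products/<uuid>.jpg" at
      // the bucket root, and '/images' would be prepended to every request.
      originPath: '',
      // Origin Access Control (OAC) lets the bucket itself stay private.
    },
  ],
  defaultCacheBehavior: {
    targetOriginId: 'S3-origin',
    viewerProtocolPolicy: 'redirect-to-https',
    // Managed-CachingOptimized. It leaves query strings out of the cache key,
    // so query-string resizing needs a custom cache policy that includes them.
    cachePolicyId: '658327ea-f89d-4fab-a63d-7e88639e58f6',
    // Compresses text-like responses; JPEG/PNG/WebP are already compressed
    compress: true,
  },
};

export default cloudfrontConfig;
```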
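Once query strings are part of the cache key, every distinct query string becomes a separate cache entry, so normalizing parameters before they reach the cache key or the resizer keeps the number of variants small. A sketch for the w, q and f parameters that CDNService below generates; the width and format allowlists and the normalizeResizeQuery name are assumptions, and h and fit would be handled the same way:

```javascript
const ALLOWED_WIDTHS = [400, 800, 1200, 1600];
const ALLOWED_FORMATS = new Set(['jpeg', 'webp', 'png']);

// Drops unknown parameters, rounds widths up to an allowed size, and emits
// parameters in a fixed order so equivalent requests share one cache entry.
function normalizeResizeQuery(queryString) {
  const input = new URLSearchParams(queryString);
  const output = new URLSearchParams();

  const width = Number(input.get('w'));
  if (Number.isFinite(width) && width > 0) {
    const snapped = ALLOWED_WIDTHS.find((w) => w >= width) ?? ALLOWED_WIDTHS[ALLOWED_WIDTHS.length - 1];
    output.set('w', String(snapped));
  }

  const quality = Number(input.get('q'));
  if (Number.isInteger(quality) && quality >= 1 && quality <= 100) {
    output.set('q', String(quality));
  }

  const format = input.get('f');
  if (ALLOWED_FORMATS.has(format)) {
    output.set('f', format);
  }

  return output.toString();
}
```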

CDN Service Class:

```javascript
// services/cdnService.js
class CDNService {
  constructor() {
    this.cloudFrontDomain = process.env.REACT_APP_CLOUDFRONT_DOMAIN;
    this.s3BucketName = process.env.REACT_APP_S3_BUCKET_NAME;
    this.region = process.env.REACT_APP_AWS_REGION;
  }

  // Builds a CloudFront URL. The query parameters only change the response if
  // an image-resizing function (for example Lambda@Edge) handles them and the
  // cache policy includes them; otherwise CloudFront serves the original.
  getOptimizedUrl(key, options = {}) {
    const { width, height, quality = 85, format = 'auto', resize = 'cover' } = options;

    const params = new URLSearchParams();
    if (width) params.append('w', width);
    if (height) params.append('h', height);
    if (quality !== 85) params.append('q', quality);
    if (format !== 'auto') params.append('f', format);
    if (resize !== 'cover') params.append('fit', resize);

    // Append the query whenever any option differs from the defaults,
    // not only when a width or height is given
    const query = params.toString();
    return `https://${this.cloudFrontDomain}/${key}${query ? `?${query}` : ''}`;
  }

  getResponsiveImageSet(key, sizes = [400, 800, 1200]) {
    return sizes.map((size) => ({
      src: this.getOptimizedUrl(key, { width: size }),
      width: size,
    }));
  }

  // Adds a <link rel="preload"> hint, at most once per URL
  preloadImage(key, options = {}) {
    const href = this.getOptimizedUrl(key, options);
    const existing = [...document.head.querySelectorAll('link[rel="preload"][as="image"]')];
    if (existing.some((link) => link.href === href)) return;

    const link = document.createElement('link');
    link.rel = 'preload';
    link.as = 'image';
    link.href = href;
    document.head.appendChild(link);
  }
}

export default new CDNService();
```

Responsive CDN Image Component:

```jsx
// components/CDNImage.jsx
import { useState, useEffect } from 'react';
import cdnService from '../services/cdnService';

const SRCSET_WIDTHS = [400, 800, 1200, 1600];

function CDNImage({ src, alt, responsive = true, lazy = true, quality = 85, ...props }) {
  const [loaded, setLoaded] = useState(false);
  const [error, setError] = useState(false);

  // Eagerly loaded (above-the-fold) images get a preload hint
  useEffect(() => {
    if (!lazy) {
      cdnService.preloadImage(src, { quality });
    }
  }, [src, lazy, quality]);

  const getSrcSet = () => {
    if (!responsive) return undefined;
    return SRCSET_WIDTHS
      .map((size) => `${cdnService.getOptimizedUrl(src, { width: size, quality })} ${size}w`)
      .join(', ');
  };

  // sizes tells the browser how wide the image will be rendered. This default
  // approximates a full-viewport-width image; adjust it to your layout.
  const getSizes = () => {
    if (!responsive) return undefined;
    return '(max-width: 400px) 400px, (max-width: 800px) 800px, (max-width: 1200px) 1200px, 1600px';
  };

  if (error) {
    return (
      <div className="image-error">
        <span>Failed to load image</span>
      </div>
    );
  }

  return (
    <div className="cdn-image-container">
      <img
        src={cdnService.getOptimizedUrl(src, { quality })}
        srcSet={getSrcSet()}
        sizes={getSizes()}
        alt={alt}
        loading={lazy ? 'lazy' : 'eager'}
        onLoad={() => setLoaded(true)}
        onError={() => setError(true)}
        style={{ opacity: loaded ? 1 : 0, transition: 'opacity 0.3s ease' }}
        {...props}
      />
    </div>
  );
}

export default CDNImage;
```

Performance Optimization Strategies

Image Optimization Pipeline

Automated Optimization Workflow:

```javascript
// utils/imageOptimizer.js
import sharp from 'sharp';

class ImageOptimizer {
  async processImage(buffer, options = {}) {
    const { width, height, quality = 85, format = 'jpeg', progressive = true } = options;

    // rotate() with no arguments applies the EXIF orientation. sharp strips
    // metadata from its output, so without this, phone photos can end up sideways.
    let pipeline = sharp(buffer).rotate();

    // Resize if dimensions provided (fit within the box, never upscale)
    if (width || height) {
      pipeline = pipeline.resize(width, height, { fit: 'inside', withoutEnlargement: true });
    }

    // Apply format-specific optimizations
    switch (format) {
      case 'jpeg':
        pipeline = pipeline.jpeg({ quality, progressive, mozjpeg: true });
        break;
      case 'png':
        // Setting quality makes sharp quantize to a palette (lossy, smaller)
        pipeline = pipeline.png({ quality, progressive });
        break;
      case 'webp':
        pipeline = pipeline.webp({ quality, effort: 6 });
        break;
      default:
        pipeline = pipeline.jpeg({ quality, progressive });
    }

    return pipeline.toBuffer();
  }

  // Produces one buffer per variant, keyed by variant.name,
  // e.g. [{ name: 'thumb', width: 200, format: 'webp' }]
  async generateVariants(buffer, variants = []) {
    const results = {};
    for (const variant of variants) {
      results[variant.name] = await this.processImage(buffer, variant);
    }
    return results;
  }
}

export default new ImageOptimizer();
```
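fit: 'inside' with withoutEnlargement: true scales an image down until it fits within the given box, keeps its aspect ratio, and leaves smaller images untouched. Knowing the resulting dimensions in advance is handy for storing width and height alongside each variant. A sketch of that arithmetic (insideSize is a hypothetical helper; Sharp's own rounding may differ by a pixel in edge cases):

```javascript
// Output size for resize(maxWidth, maxHeight, { fit: 'inside',
// withoutEnlargement: true }). A missing bound does not constrain the result.
function insideSize(srcWidth, srcHeight, maxWidth, maxHeight) {
  const scale = Math.min(
    1, // withoutEnlargement: never scale up
    maxWidth ? maxWidth / srcWidth : Infinity,
    maxHeight ? maxHeight / srcHeight : Infinity,
  );
  return {
    width: Math.round(srcWidth * scale),
    height: Math.round(srcHeight * scale),
  };
}
```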

Build-Time Optimization:

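```javascript
// scripts/optimizeImages.js
// Re-encodes uploaded images in place. Run it once per file: re-encoding an
// already compressed JPEG loses quality each time.
const fs = require('fs');
const path = require('path');
const sharp = require('sharp');
const glob = require('glob');

async function optimizeImages() {
  const imageFiles = glob.sync('public/uploads/**/*.{jpg,jpeg,png}');

  for (const file of imageFiles) {
    const buffer = fs.readFileSync(file);
    const pipeline = sharp(buffer)
      .rotate() // apply EXIF orientation before metadata is stripped
      .resize(1200, null, { withoutEnlargement: true });

    // Keep each file's format so its content still matches its extension
    const isPng = path.extname(file).toLowerCase() === '.png';
    const optimized = await (isPng
      ? pipeline.png({ compressionLevel: 9 })
      : pipeline.jpeg({ quality: 85, progressive: true })
    ).toBuffer();

    fs.writeFileSync(file, optimized);
    console.log(`Optimized: ${file}`);
  }
}

optimizeImages().catch(console.error);
```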

Caching Strategies

Browser Caching Headers:

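```javascript
// next.config.js
module.exports = {
  async headers() {
    return [
      {
        source: '/uploads/:path*',
        headers: [
          {
            key: 'Cache-Control',
            // Safe only because uploaded filenames are unique and never
            // overwritten: a changed image must get a new filename.
            value: 'public, max-age=31536000, immutable',
          },
        ],
      },
    ];
  },
};
```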

Service Worker Caching:

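```javascript
// sw.js
const CACHE_NAME = 'images-cache-v1';
// URL prefixes whose responses are cached (replace the CDN host with yours)
const IMAGE_CACHE_URLS = [
  `${self.location.origin}/uploads/`,
  'https://your-cdn.com/images/',
];

self.addEventListener('fetch', (event) => {
  const { request } = event;
  const isImage = IMAGE_CACHE_URLS.some((prefix) => request.url.startsWith(prefix));
  if (request.method !== 'GET' || !isImage) {
    return; // let the browser handle everything else
  }

  // Cache-first: serve from the cache, otherwise fetch and store a copy
  event.respondWith(
    caches.match(request).then((cached) => {
      if (cached) return cached;
      return fetch(request).then((response) => {
        // Only cache successful responses, never 404s or 500s. Cross-origin
        // images fetched without CORS are opaque (ok is false) and are skipped.
        if (response.ok) {
          const responseClone = response.clone();
          caches.open(CACHE_NAME).then((cache) => cache.put(request, responseClone));
        }
        return response;
      });
    }),
  );
});
```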
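The service worker cache above grows without bound. One way to cap it is to delete the oldest entries after each write. A sketch, where trimCache and the limit of 200 are assumptions; Cache.keys() returns requests in insertion order, so this is first-in-first-out eviction rather than true LRU, and the function accepts any object with keys() and delete() so it can be tested outside a browser:

```javascript
// Deletes the oldest entries until at most maxEntries remain
async function trimCache(cache, maxEntries) {
  const keys = await cache.keys();
  const excess = keys.length - maxEntries;
  for (let i = 0; i < excess; i += 1) {
    await cache.delete(keys[i]);
  }
}

// In the service worker, after storing a response:
// caches.open(CACHE_NAME).then((cache) => trimCache(cache, 200));
```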

Cost Analysis and Optimization

Storage Cost Comparison

Local Storage Costs:

- Server Hardware: $100-500/month for dedicated servers

- Bandwidth: Variable based on traffic

- Maintenance: Developer time for maintenance

- Backup: Additional storage costs

S3 Storage Costs (US East (N. Virginia) region, as of 2025):

- Standard Storage: $0.023 per GB/month

- GET Requests: $0.0004 per 1,000 requests

- PUT Requests: $0.005 per 1,000 requests

- Data Transfer: $0.09 per GB (first 10TB)

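CloudFront Costs (United States and Europe, as of 2025):

- Data Transfer Out: $0.085 per GB (first 10TB)

- Data Transfer from S3 to CloudFront: Not charged

- HTTPS Requests: $0.0100 per 10,000 requests (HTTP: $0.0075 per 10,000)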

- Origin Shield: $0.0100 per 10,000 requests
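To compare serving straight from S3 with serving through CloudFront, a rough monthly estimate can be scripted. This sketch uses single-tier list prices (S3 Standard storage at $0.023 per GB, GET and PUT at $0.0004 and $0.005 per 1,000, S3 internet egress at $0.09 per GB, CloudFront egress at $0.085 per GB, and an assumed HTTPS request rate of $0.0100 per 10,000 for US edge locations), ignores free-tier allowances, and assumes only cache misses reach S3; transfer from S3 to CloudFront is not billed. estimateMonthlyCost and its parameters are hypothetical:

```javascript
const PRICES = {
  s3StoragePerGB: 0.023,
  s3GetPer1000: 0.0004,
  s3PutPer1000: 0.005,
  s3EgressPerGB: 0.09,
  cfEgressPerGB: 0.085,
  cfHttpsPer10000: 0.01,
};

// views: image requests per month; cacheHitRatio: assumed CloudFront hit ratio
function estimateMonthlyCost({ storageGB, uploads, views, egressGB, cacheHitRatio = 0.9 }) {
  const storage = storageGB * PRICES.s3StoragePerGB;
  const puts = (uploads / 1000) * PRICES.s3PutPer1000;

  const s3Only = storage + puts
    + (views / 1000) * PRICES.s3GetPer1000
    + egressGB * PRICES.s3EgressPerGB;

  const withCloudFront = storage + puts
    + ((views * (1 - cacheHitRatio)) / 1000) * PRICES.s3GetPer1000 // misses only
    + egressGB * PRICES.cfEgressPerGB
    + (views / 10000) * PRICES.cfHttpsPer10000;

  return { s3Only, withCloudFront };
}
```

With storageGB 100, uploads 100,000, views 1,000,000 and egressGB 50, this returns about $7.70 for S3 alone and $8.09 through CloudFront: at that volume the per-request charge outweighs the cheaper egress, so the CDN's case rests mainly on latency and reduced origin load rather than on this line item.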

Cost Optimization Strategies

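S3 Lifecycle Policies (objects in the GLACIER storage class must be restored before they can be downloaded, so this example limits the rule to a hypothetical archive/ prefix holding images the app no longer displays):

```json
{
  "Rules": [
    {
      "ID": "ImageLifecycle",
      "Status": "Enabled",
      "Filter": { "Prefix": "archive/" },
      "Transitions": [
        { "Days": 30, "StorageClass": "STANDARD_IA" },
        { "Days": 90, "StorageClass": "GLACIER" }
      ]
    }
  ]
}
```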

Intelligent Tiering:

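```javascript
// Opt-in archive tiers for objects stored in the INTELLIGENT_TIERING storage
// class (the frequent/infrequent access tiers need no configuration).
// Archived objects must be restored before they can be served again.
const intelligentTieringConfig = {
  Id: 'intelligent-tiering',
  Status: 'Enabled',
  Filter: { Prefix: 'archive/' }, // hypothetical prefix for rarely viewed images
  Tierings: [
    { Days: 90, AccessTier: 'ARCHIVE_ACCESS' }, // minimum allowed: 90 days
    { Days: 180, AccessTier: 'DEEP_ARCHIVE_ACCESS' }, // minimum allowed: 180 days
  ],
};

// Applied with the AWS SDK v2:
// await s3.putBucketIntelligentTieringConfiguration({
//   Bucket: process.env.S3_BUCKET_NAME,
//   Id: intelligentTieringConfig.Id,
//   IntelligentTieringConfiguration: intelligentTieringConfig,
// }).promise();
```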

Security Best Practices

Access Control

S3 Bucket Policies:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "RestrictToApplication",
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws:iam::ACCOUNT:role/YourAppRole"
      },
      "Action": [
        "s3:GetObject",
        "s3:PutObject",
        "s3:DeleteObject"
      ],
      "Resource": "arn:aws:s3:::your-bucket/*"
    }
  ]
}
```

Presigned URLs for Secure Access:

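```javascript
// Secure upload with presigned URLs (server-side, AWS SDK v2)
const AWS = require('aws-sdk');

// Credentials come from the environment (AWS_ACCESS_KEY_ID, ...) or an IAM role
const s3 = new AWS.S3({ region: process.env.AWS_REGION, signatureVersion: 'v4' });

async function getPresignedUploadUrl(key, contentType) {
  const params = {
    Bucket: process.env.S3_BUCKET_NAME,
    Key: key,
    ContentType: contentType, // the client must send this exact Content-Type
    Expires: 300, // 5 minutes
    // No ACL here: a signed ACL would also have to be sent as an x-amz-acl
    // header, and buckets created with current defaults have ACLs disabled.
  };
  return s3.getSignedUrlPromise('putObject', params);
}

// Usage, inside an async route handler:
// const uploadUrl = await getPresignedUploadUrl('user-uploads/profile-pic.jpg', 'image/jpeg');
```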
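On the browser side, the presigned URL is used with a plain PUT request. The Content-Type header must match the ContentType the URL was signed with, otherwise S3 typically rejects the request with a signature mismatch. A sketch (putToPresignedUrl is a hypothetical helper; fetch is injectable so the function can be tested):

```javascript
// Uploads a File/Blob to a presigned PUT URL
async function putToPresignedUrl(url, file, contentType, fetchImpl = fetch) {
  const response = await fetchImpl(url, {
    method: 'PUT',
    headers: { 'Content-Type': contentType },
    body: file,
  });
  if (!response.ok) {
    throw new Error(`S3 upload failed with status ${response.status}`);
  }
  return response;
}
```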

Input Validation

File Type Validation:

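```javascript
const ALLOWED_TYPES = ['image/jpeg', 'image/png', 'image/webp'];
const MAX_FILE_SIZE = 10 * 1024 * 1024; // 10MB

// file.type is derived from the filename on the client and can be spoofed,
// so repeat this check on the server and inspect the file's bytes as well.
function validateFile(file) {
  if (!ALLOWED_TYPES.includes(file.type)) {
    throw new Error('Invalid file type');
  }
  if (file.size > MAX_FILE_SIZE) {
    throw new Error('File too large');
  }
  return true;
}
```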

Image Content Validation:

// Validate image headers

function validateImageHeaders(buffer) {

const jpegMagic = Buffer.from([0xFF, 0xD8, 0xFF]);

const pngMagic = Buffer.from([0x89, 0x50, 0x4E, 0x47]);

if (buffer.subarray(0, 3).equals(jpegMagic) ||

buffer.subarray(0, 4).equals(pngMagic)) {

return true;

}

throw new Error('Invalid image format');

}

Monitoring and Analytics

Performance Monitoring

Image Load Time Tracking:

// Track image performance

function trackImagePerformance(src) {

const startTime = performance.now();

return new Promise((resolve, reject) => {

const img = new Image();

img.onload = () => {

const loadTime = performance.now() - startTime;

// Send to analytics

analytics.track('image_load', {

src,

loadTime,

size: img.naturalWidth * img.naturalHeight,

timestamp: new Date().toISOString()

});

resolve(img);

};

img.onerror = reject;

img.src = src;

});

}
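Timing a fresh Image object measures only that one request. The browser's Resource Timing API already records image fetches made by img elements, including transfer sizes. A sketch that summarizes those entries (summarizeImageTimings is hypothetical; percentiles use a simple nearest-rank approximation):

```javascript
// Summarizes PerformanceResourceTiming-like entries for <img> requests
function summarizeImageTimings(entries) {
  const images = entries.filter((entry) => entry.initiatorType === 'img');
  const durations = images.map((entry) => entry.duration).sort((a, b) => a - b);

  // Nearest-rank percentile over the sorted durations
  const percentile = (q) => {
    if (durations.length === 0) return 0;
    return durations[Math.min(durations.length - 1, Math.floor(q * durations.length))];
  };

  return {
    count: images.length,
    totalTransferBytes: images.reduce((sum, entry) => sum + (entry.transferSize || 0), 0),
    medianMs: percentile(0.5),
    p95Ms: percentile(0.95),
  };
}
```

In the browser, call it as summarizeImageTimings(performance.getEntriesByType('resource')). transferSize is 0 for cache hits and for cross-origin responses without a Timing-Allow-Origin header, so CDN-served images need that header for their sizes to be reported.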

Storage Usage Monitoring:

// Monitor S3 usage

async function getStorageMetrics() {

const cloudWatch = new AWS.CloudWatch();

const params = {

MetricName: 'BucketSizeBytes',

Namespace: 'AWS/S3',

Dimensions: [

{

Name: 'BucketName',

Value: process.env.S3_BUCKET_NAME

},

{

Name: 'StorageType',

Value: 'StandardStorage'

}

],

StartTime: new Date(Date.now() - 24 * 60 * 60 * 1000),

EndTime: new Date(),

Period: 3600,

Statistics: ['Average']

};

const data = await cloudWatch.getMetricStatistics(params).promise();

return data.Datapoints;

}
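getMetricStatistics does not guarantee any ordering of the returned datapoints, so pick the latest one explicitly before reporting it. A sketch (latestBucketSizeGiB is a hypothetical helper):

```javascript
// Returns the most recent Average value in GiB, or null if there is no data
function latestBucketSizeGiB(datapoints) {
  if (!datapoints || datapoints.length === 0) return null;
  const latest = datapoints.reduce((a, b) =>
    (new Date(a.Timestamp) >= new Date(b.Timestamp) ? a : b));
  return latest.Average / 1024 ** 3;
}

// Usage: const sizeGiB = latestBucketSizeGiB(await getStorageMetrics());
```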

Error Tracking

Image Load Error Monitoring:

// Global image error handler

document.addEventListener('error', (e) => {

if (e.target.tagName === 'IMG') {

console.error('Image load failed:', e.target.src);

// Send to error tracking

errorTracker.captureException(new Error('Image load failed'), {

extra: {

src: e.target.src,

alt: e.target.alt,

timestamp: new Date().toISOString()

}

});

}

}, true);

Conclusion

Choosing the right image storage solution depends on your specific needs:

Use Local Storage When:

- Building MVP or small applications

- Limited budget and simple requirements

- Compliance requires on-premises storage

- Simple upload/download functionality needed

Use S3 When:

- Need scalable, reliable storage

- Storage needs may grow quickly or unpredictably

- Want to focus on core business logic

- Require advanced features like versioning

Use CDN When:

- Performance is critical

- Global user base

- High traffic volumes

- Want to optimize Core Web Vitals

Implementation Recommendations:

- Start Simple: Begin with local storage for MVP

- Scale Gradually: Move to S3 as you grow

- Add CDN: Implement when performance matters

- Monitor Always: Track performance and costs

- Optimize Continuously: Regular audits and improvements

The key is to match your storage solution to your current needs while planning for future growth. Each solution has its place in the SaaS development lifecycle.
